Bots Are Sweeping the Web: How Should Digital Asset Owners Respond?

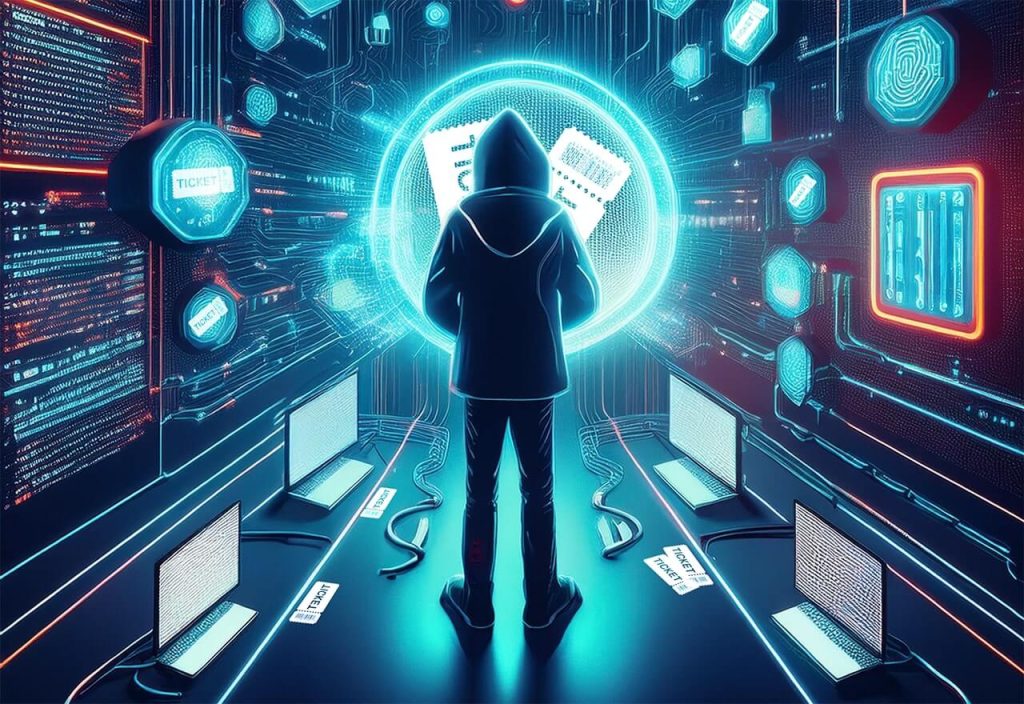

Have you ever checked your web traffic logs and wondered who all those mysterious visitors are? It turns out that a large share of what looks like legitimate traffic is actually automated scrapers: bots armed with ever-smarter AI that vacuum up your articles, images, and even the email addresses of your readers and employees.

Content Scrapers 1.0: Old-School Tactics

A simple robots.txt file, which only well-behaved crawlers choose to honor, and basic rate limits cannot stop these bots. Today’s scrapers rotate through thousands of IP addresses and mimic real human browsing so convincingly that basic defenses can’t tell them apart from genuine users.

Content Scrapers 2.0: AI Meets Data Theft

Today’s Content Scrapers 2.0 don’t stop at copying headlines and article text. They also harvest email lists, contact data, and other valuable assets you might not even realize you’re exposing. What began as lead-generation platforms like , and has morphed into versatile crawlers that treat every site as a B2B goldmine. Extensions such as and quickly adapted to mine publisher domains for leads. Under the hood, these bots run headless browsers that execute complex JavaScript, interpret dynamic layouts, and scroll through infinite feeds to harvest every URL.
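
To see why basic per-IP rate limits struggle against crawlers that rotate addresses, here is a minimal Python sketch of a sliding-window limiter keyed on client IP. The policy (60 requests per IP per minute), the simulated pacing of one request every 10 ms, and the addresses (taken from documentation ranges) are illustrative assumptions, not a recommended configuration. The sketch replays the same 1,000-request crawl twice: once from a single address and once spread across a pool of 50.

```python
from collections import defaultdict, deque


class PerIPRateLimiter:
    """Sliding-window limiter: at most `limit` requests per IP per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    def allow(self, ip: str, now: float) -> bool:
        hits = self._hits[ip]
        # Forget requests that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject and do not record
        hits.append(now)
        return True


def simulate(num_requests: int, ips: list[str], limiter: PerIPRateLimiter) -> int:
    """Send one request every 10 ms, cycling through `ips`; count those allowed."""
    allowed = 0
    for i in range(num_requests):
        if limiter.allow(ips[i % len(ips)], now=i * 0.01):
            allowed += 1
    return allowed


if __name__ == "__main__":
    # Illustrative policy: 60 requests per IP per 60 seconds.
    single = simulate(1000, ["203.0.113.7"], PerIPRateLimiter(60, 60.0))
    pool = [f"198.51.100.{n}" for n in range(1, 51)]  # 50 rotating addresses
    rotated = simulate(1000, pool, PerIPRateLimiter(60, 60.0))
    print(f"single IP: {single}/1000 allowed")
    print(f"50 rotating IPs: {rotated}/1000 allowed")
```

Run as a script, it allows only 60 of the 1,000 requests from the single address but all 1,000 from the rotating pool, because each rotated address sends just 20 requests and never approaches its limit. That gap is why the defenses discussed in this article key on behavior and fingerprints rather than on the IP address alone.
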
When they hit a CAPTCHA, they offload it to human-in-the-loop farms or machine-vision solvers that crack challenges in seconds. By the time your defenses notice, they’ve already exfiltrated thousands of contacts under rotating IPs. They blend in with genuine users by randomizing request intervals, mimicking mouse movements, shuffling user-agent strings, and even spinning up mini AI agents to choose which pages to scrape next, making them almost indistinguishable from real visitors without deep behavioral analytics.

The BBC’s June 2025 cease-and-desist letter to Perplexity AI, which demanded that it stop scraping BBC articles and delete any cached copies, shows that legal and licensing strategies are now as vital as technical defenses. Publishers and other digital content owners are prepared to litigate to protect their IP even as they strengthen their server safeguards.

Real-World Impact on Your Brand

When bots treat your site as a quarry, the fallout hits both performance and perception. Traffic surges of five to ten times normal levels during major campaigns force you to overprovision servers or risk downtime, while scraped personal data such as emails and names can land you in hot water under GDPR, CCPA, or other privacy regulations. Meanwhile, duplicate content penalties drag down your search rankings and dilute your brand authority, starving you of the organic traffic you worked so hard to earn.

Advanced Bot Tactics: From Fingerprints to AI Evasion

Modern scrapers have grown adept at evading defenses.
They spoof browser fingerprints to impersonate environments ranging from mobile Chrome in Taipei to desktop Firefox in Amsterdam. CAPTCHA challenges are outsourced to human-in-the-loop farms or defeated by advanced machine-vision solvers in seconds. Bots randomize mouse movements, scroll depths, and click patterns to sidestep anomaly detectors, impersonating legitimate interactions and slipping past simple rule-based blocks.

A Double-Edged Sword for Digital Marketers

AI crawling can serve your marketing goals when wielded intentionally and thoughtfully. By using your own GenAI-powered tools to crawl your proprietary site data, such as product specs, case studies, and whitepapers, you ensure that AI-generated chatbots and summaries draw on the freshest, most accurate content, reinforcing brand consistency and indirectly boosting SEO. At the same time, you must manage external crawlers to protect your assets: search engines and trusted partners should be able to index your material, but competitors and rogue AI services must be blocked. Precise, behavior-based policies let in “good” AI crawlers while keeping unwanted bots at bay, safeguarding your assets, infrastructure, and brand reputation.

AI-Powered Behavioral Fingerprinting and Standards-Based Defense

Outdated defenses won’t suffice against today’s sophisticated bots. You need an AI-driven solution that fingerprints every visitor by combining client-side signals with server-side analytics, backed by a dynamic bot management engine that throttles or blocks malicious crawlers in real time. At the same time, adopting the IETF AI Preferences specification gives you a machine-readable framework for declaring how AI systems may use your content. Only this behavior-based, standards-aligned approach can accurately distinguish genuine readers from impersonators and let you reclaim control over who accesses, indexes, and repurposes your digital assets.
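
The allow/throttle/block decision described above can be sketched in a few dozen lines of Python. This is a hypothetical illustration, not IntelliFend’s engine or any product’s API: the signal names, point weights, and thresholds are invented for the example. The one piece drawn from published practice is the crawler check, which follows the reverse-DNS-then-forward-DNS verification Google documents for Googlebot; other crawler operators publish their own hostnames or IP lists. The DNS resolvers are injectable so the logic can be exercised without network access.

```python
import socket
from dataclasses import dataclass
from typing import Callable, Sequence

# Hostname suffixes Google documents for verifying Googlebot; extend per crawler.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com")


def dns_reverse(ip: str) -> str:
    """Reverse (PTR) lookup; raises OSError if there is no record."""
    return socket.gethostbyaddr(ip)[0]


def dns_forward(host: str) -> list[str]:
    """Forward lookup returning the host's IPv4 addresses."""
    return socket.gethostbyname_ex(host)[2]


def is_verified_crawler(
    ip: str,
    suffixes: Sequence[str],
    reverse: Callable[[str], str] = dns_reverse,
    forward: Callable[[str], list[str]] = dns_forward,
) -> bool:
    """Reverse-resolve the IP, check the domain, then confirm it resolves back."""
    try:
        host = reverse(ip)
        if not host.endswith(tuple(suffixes)):
            return False
        return ip in forward(host)
    except OSError:
        return False


@dataclass
class VisitorSignals:
    ip: str
    claims_crawler: bool        # user-agent names a known crawler (easily spoofed)
    headless_fingerprint: bool  # hypothetical client-side automation signal
    requests_per_minute: float  # hypothetical server-side rate for this fingerprint
    ips_per_fingerprint: int    # distinct IPs seen sharing this fingerprint


def risk_points(s: VisitorSignals) -> int:
    """Hypothetical weights; integer points avoid float rounding at thresholds."""
    points = 0
    if s.headless_fingerprint:
        points += 40
    if s.requests_per_minute > 120:
        points += 30
    if s.ips_per_fingerprint > 20:  # one "browser" hopping across many IPs
        points += 30
    return points


def decide(
    s: VisitorSignals,
    verify: Callable[[str, Sequence[str]], bool] = is_verified_crawler,
) -> str:
    if s.claims_crawler:
        # Self-declared crawlers must prove it; impostors are blocked outright.
        return "allow" if verify(s.ip, TRUSTED_SUFFIXES) else "block"
    points = risk_points(s)
    if points >= 70:
        return "block"
    if points >= 40:
        return "throttle"
    return "allow"
```

A visitor that merely claims to be a crawler in its user-agent string is blocked unless its IP passes verification, while ordinary visitors are scored on combined client-side and server-side signals. In practice, weights like these would likely need tuning against real traffic rather than being hard-coded.
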

How IntelliFend Manages These Bots

IntelliFend embodies this balanced strategy. Its multilayered engine fuses advanced fingerprinting with real-time behavioral analytics to detect even the most human-like bots, while its adaptive policy framework enforces new rules instantly without disrupting legitimate traffic. Full support for IETF AI Preferences ensures that the content usage directives you publish are honored at scale, and intuitive dashboards with automated alerts give your marketing and security teams the visibility and control they need.

Turning the Tables on AI Crawlers

AI crawling is a double-edged sword for digital marketers. You want your own AI tools to learn from your content, but you must control who else can access it. By pairing in-house AI crawling with precise, behavior-based bot management, you can harness AI’s power for brand growth while keeping unwanted scrapers, along with the risks they bring, firmly at bay.
